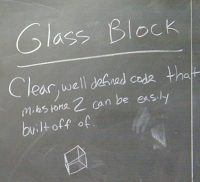

Preproduction

Some of Ron's students did preliminary research and design before summer, but it was during the summer semester that most of the design happened. I was not heavily involved in this stage: I did a bit of consulting with the design team, primarily on what scope was appropriate for my team to complete in the Fall. At the end of the summer, I was given a set of design notes, which I transcribed and interpreted into a design wiki. The original format was a PowerPoint slideument, which was wholly inappropriate for the task, in part because there was no way to tell by looking at the printed copy that some of the data tables exceeded the size of the page. In retrospect, I wish I had remembered to point the design team toward Stone Librande's One-Page Design approach. They did not necessarily need one-page designs, but it certainly would have gotten them thinking more critically about how to communicate this information effectively. Regardless, by copying the information into a structured design wiki, I was able to build my own mental model of the game, codify nomenclature, and identify missing pieces. As usual, I used Google Sites as a lightweight wiki. The fact that the design was essentially static meant that I didn't have to deal with refactoring or maintaining the wiki: it was primarily for structured presentation to the technical team.

| Lead designer Michael Smith demonstrates the paper prototype to community partner Jeannie Regan-Dinnius of the Indiana Department of Natural Resources Division of Historic Preservation and Archaeology |


| Jeannie explores the physical prototype |


| RB368, before the start of the semester |

Team logistics

My team of twelve gathered on the first day of the semester to begin work. I had prepped them over email and as part of the application process, and we used the first week to talk about project management using Scrum and the basics of Unity3D. I had used both of these in my semester at the Virginia B. Ball Center for Creative Inquiry and felt comfortable applying them to this new project. We set up a task board and got to work, planning seven two-week sprints to bring us to a successful completion.

The class was scheduled at 9AM Monday, Wednesday, and Friday, and this was when we held our "daily" stand-up meetings. The fact that many people had 10AM classes very quickly became an impediment, albeit an expected one. Unlike at the VBC, and unlike the fortunate scheduling of the Morgan's Raid Spring Team, these students had non-complementary schedules. As a result, there was very little whole-team collocated work outside of 9AM MWF. After-hours meetings were regularly initiated by the team, but I was rarely able to attend. Each student was on their honor to give nine hours of work per week to the project, but it would have been nicer to have more collocated time.


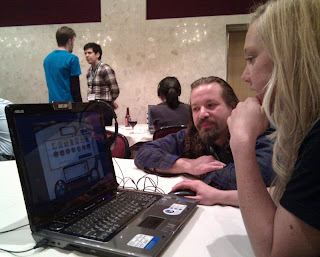

| The technical team hard at work |

After failing to complete any user stories in their first sprint, the technical team was able to pull itself up by its proverbial bootstraps and get productive. We conducted retrospectives at the end of each sprint to reflect on what was working and what wasn't working. The same issues tended to come up time and again, but I do feel like the team made progress in self-management and accountability during the semester. One of the perennial problems I have in dealing with students—which I suspect is a subset of dealing with humans—is their love of generality. Students will very gladly speak in platitudes and generalities, rather than nailing down specific people for specific problems. As a result, everyone tends to smile and nod and feel that a reflection was useful, but not necessarily hold each other accountable, since there's always a way to wiggle out. I got a copy of Patrick Kua's The Retrospective Handbook during the semester but have not had the chance to read it yet; I hope that I can find some practices within that I can bring to next semester's project (more on that in another post).

Managing and teaching

Managing these projects can be stressful. Each sprint, the team talks about trying to make steady progress, yet almost every sprint, it feels like there is no way they will complete their tasks on time. The team's retrospective notes show that they grow to desire steady progress. However, there is still a strong tendency to fall to the illusion of progress in the first half of the sprint, doing a lot of talking and hand-waving, and then rushing through the second half. As a result, validation was inconsistent, and tasks were rarely done done. Even at the end of the project, a major defect still existed in the build, and the response from the students working on this feature was, essentially, that the defect was enshrined in their implementation and we'd have to live with it. (NB: I fixed it in about half a day's work, but see the section on Expertise below.)

The stress this semester was compounded by my nagging doubt that I was not spending enough time sitting with the students and creating software with them. In the three hours per week that I saw the whole team, my time was spent on project management: clarifying design ideas, coordinating communication, pulling on the artists for sketches, and barely having time to look over shoulders at source code before people ran off to their next meetings. My personal schedule did not permit my coming in to after-hours meetings, in large part because of the welcome addition of my third son. I needed to prioritize family over working extra hours, and I have no regrets about this! Yet, I cannot shake the feeling that there had to be a way to influence the students more strongly toward best practices. I had put together a team programming manual in an attempt to codify collaborative practice, and though this document was distributed before the semester and reviewed in the first week, it went almost completely unheeded. Actually, it was a bit worse than that: the students religiously followed the parts that made life easier and ignored the parts that actually would have made the implementation better. In particular, I borrowed two bits of Robert C. Martin's Clean Code:

- Don't use comments: they rot and indicate a failure of attention to careful design.

- Don't repeat yourself: replace copy-paste code with better abstractions.

I'm sure you can guess which one of those two they followed. Hint: we had a lot of copy-pasted code with no comments to explain it. To be clear, I am not blaming the students for being novices. I think that a major factor here is that they could not see the master working his craft, and so I fear that many did not learn some of the deeper lessons I hoped for them. My principal contrast here is with the Morgan's Raid Spring Team, a team in which I was embedded for about nine hours per week in Spring 2011.

I found several missed opportunities for good software design as I've worked directly with the code this week. One of the most egregious is in the handling of percentage tables. The core game design involves tables of outcomes determined by percent likelihood, in the vein of a tabletop wargame. Code like this can be found throughout the implementation:

```java
Random rand = new Random();
float roll = rand.nextFloat();
if (roll >= 0.0f && roll <= 0.05f) {
    // do something
}
else if (roll > 0.05f && roll <= 0.10f) {
    // do something else
}
// etc.
```

This happens a lot in the code, including the redundant lower-bounds checking. A simple table abstraction, with methods to add entries and compute percentages, would have made this much more readable and maintainable. I'm not sure how to help students perceive these affordances, but it's something I want to try to spend more time on in both CS315/515 and CS222, which is the prerequisite.
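A minimal sketch of what such a table abstraction might look like, written in Java to match the fragment above. The class name `PercentTable`, its methods, and the outcome strings are hypothetical and are not taken from the project's code. The sketch also assumes integer rolls from 0 to 99 rather than floats, which sidesteps floating-point boundary comparisons.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Random;

// Hypothetical sketch: a table of outcomes chosen by percent likelihood.
// None of these names come from the project's actual code.
class PercentTable<T> {
    private final List<T> outcomes = new ArrayList<>();
    // Cumulative upper bounds (exclusive), e.g. 5, 10, 100.
    private final List<Integer> upperBounds = new ArrayList<>();
    private int total = 0;

    // Adds an outcome with the given percent chance; returns this for chaining.
    PercentTable<T> add(int percent, T outcome) {
        if (percent <= 0 || total + percent > 100) {
            throw new IllegalArgumentException(
                "percent must be positive and keep the total at or below 100");
        }
        total += percent;
        outcomes.add(outcome);
        upperBounds.add(total);
        return this;
    }

    // Returns the outcome for a roll in the range 0 (inclusive) to 100 (exclusive).
    T lookup(int roll) {
        if (total != 100) {
            throw new IllegalStateException("table covers " + total + "%, not 100%");
        }
        if (roll < 0 || roll >= 100) {
            throw new IllegalArgumentException("roll must be in 0..99");
        }
        for (int i = 0; i < upperBounds.size(); i++) {
            if (roll < upperBounds.get(i)) {
                return outcomes.get(i);
            }
        }
        throw new AssertionError("unreachable: the last bound is 100");
    }

    // Rolls the dice and returns the matching outcome.
    T roll(Random random) {
        return lookup(random.nextInt(100));
    }

    public static void main(String[] args) {
        PercentTable<String> encounters = new PercentTable<String>()
            .add(5, "ambush")
            .add(5, "storm")
            .add(90, "nothing happens");
        System.out.println(encounters.lookup(7)); // prints "storm"
        System.out.println(encounters.roll(new Random()));
    }
}
```

With something like this in place, each table is declared once as data, the cumulative bounds are computed rather than typed by hand, and a table that does not sum to 100% fails loudly instead of silently dropping rolls.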

There were several times when I was able to push students in the direction of better design, and some of these came up as significant learning outcomes in our semester retrospective. I worked with students to incorporate the Builder pattern in the domain model and frequently assisted with functional decomposition. I also guided the team to use a formal state machine analysis of the game and then use the state design pattern to implement it; this change impacted the entire team for the good. These interactions, in which I guided students toward great ideas of software design, showed up positively in course evaluations and the semester retrospective.
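For readers unfamiliar with the state design pattern, here is a minimal sketch in Java. The state and event names (`Traveling`, `Encounter`, `GameOver`, `ARRIVE`, and so on) are invented for illustration and are not taken from the team's actual state diagram or code.

```java
// Hypothetical sketch of the State pattern. The states and events are
// illustrative only and do not reproduce the project's state diagram.
class TravelGame {
    private GameState state = new Traveling();

    // Delegates the event to the current state, which picks the next state.
    void fire(GameEvent event) {
        state = state.handle(event);
    }

    GameState state() {
        return state;
    }

    public static void main(String[] args) {
        TravelGame game = new TravelGame();
        game.fire(GameEvent.ARRIVE);
        System.out.println(game.state().getClass().getSimpleName()); // prints "Encounter"
    }
}

enum GameEvent { ARRIVE, RESOLVE, FINISH }

interface GameState {
    // Returns the state to move to; returning this means no transition.
    GameState handle(GameEvent event);
}

final class Traveling implements GameState {
    @Override
    public GameState handle(GameEvent event) {
        return event == GameEvent.ARRIVE ? new Encounter() : this;
    }
}

final class Encounter implements GameState {
    @Override
    public GameState handle(GameEvent event) {
        switch (event) {
            case RESOLVE: return new Traveling();
            case FINISH:  return new GameOver();
            default:      return this;
        }
    }
}

final class GameOver implements GameState {
    @Override
    public GameState handle(GameEvent event) {
        return this; // terminal state: every event is ignored
    }
}
```

The point of the pattern is that each class corresponds to one node of the state diagram, so the code and the diagram can be checked against each other transition by transition.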


| The team considers the task board and the state diagram |


| Dialog box design standards |

Working with clients and the community

Ron had a class at the same time as CS315/515, and so he could not attend our Sprint Review meetings. Knowing that we needed his feedback, we held Sprint Review Redux meetings at 10AM, and about four or five team members would stick around for it. It didn't come up until the course evaluations, but the students who stayed really valued this interaction. I had not thought much about the impact of this meeting on the students, primarily thinking of it as something I needed to ensure we were going in the right direction. After reading the evaluations, I realized that such meetings are critical to the team's morale: these meetings helped them feel like valuable contributors to a bigger purpose rather than programming automata or hired help. I wonder if a different teaching schedule would have led to the team's being more consistent in completing tasks with the highest quality, if they could have built more empathy for Ron's and the players' needs?

The technical team conducted two external playtests of the game late in the semester, knowing that the design team had already tested the core mechanics. These were after-hours, and participation was encouraged but optional. I think that, as with the meetings with Ron, these meetings helped the team to understand better the context and impact of their work. The conventional use of such playtesting is to identify problems, but in this case, much of the design was done before my students got their hands on it. I think that the primary benefit here was not in usability, but in helping my students remember what elementary school students are like, and that there were real end users for this project.


| Team members Matt Brewer and Daniel Wilson playtesting at Motivate Our Minds |

On expertise

The game is scheduled to "ship" on December 31, coinciding with the end of the ESA grant. Long ago, I had promised Ron that I would help out during the break if there was anything left to be done, and that's what I've been doing this week. Some of the artists are still on contract to finish assets, and so I continue to direct their efforts while also integrating their work into the project, fixing defects, and adding features. Working intimately with my students' code has forced me to think about the nature of novices and experts.

So far this week, I have spent twenty hours working on the project. This is more than the amount of attention I asked my students to spend on the project in a whole sprint, based on the number of credit-hours they earned. There is no definitive ratio that says how much more productive an expert is than a novice, and I've encountered claims from five to twenty. If we take the conservative estimate of five for the sake of discussion, then my twenty hours correspond to about one hundred student-hours. At nine hours per week, that is what I could expect one of my students to do in about eleven weeks of work, or roughly 2/3 of a semester. If we further consider the productivity costs of interruptions (students having to manage four or five other courses, jobs, and relationships, whereas I'm working from home and still relying on my wife to wrangle the boys), then I've easily done more than I could expect a single student to do in an entire semester.

Putting it in this perspective, while I am frustrated to see some low-quality code in the project, I think it's important to still qualify the learning experience as a success. Some of them have only been programming for two years, and that has been while enrolled as full-time students! The students certainly learned a lot about working on an interdisciplinary team, with all the joys and pains that come with leadership, accountability, trust, empathy, and professionalism. They got to build a real software system, large enough that they were able to watch it nearly collapse under the weight of their own bad decisions yet still have time to fix it. They saw the need for something better: for formal state-based analysis, for design patterns, for object-oriented and functional decomposition, for having high standards. They got to see how hard it is to actually make a game, and that tools like Unity3D only barely manage the complexity: there are no silver bullets. They worked in C#, which was a new experience for many of them, and they got a taste of how it resembles Java while also getting to tinker with delegates and properties. Sounds like a win to me.

Personal conclusions

I took the better part of the day to write this reflection because I knew that by writing it, I would be able to better articulate what I learned from this experience. I have divided my conclusions into two sections. First, the ones that we all already know to be true, but it's good to be reminded:

- Given the opportunity to make something significant, students will rise to the challenge.

- Having a dedicated space is critical.

- Collocation is important, especially across disciplines.

- Face-to-face communication is always preferable.

- Many of the outcomes of immersive learning would go unarticulated without making the time for reflection.

Here are some more specific notes that I should keep in mind:

- I should encourage future teams strongly toward one-page design documents, if for no other reason than to get them thinking about how best to communicate their designs.

- I need to notice when I perceive an affordance that my students do not. When this happens, I need to point out not just what action can be taken, but how I recognized it, so that they can learn to see such affordances as well.

- I need to be conscientious about modeling professional behavior so that my students can learn to think, design, and communicate as professionals. Scheduling and executing code reviews is a good place to start.

- To improve the impact of reflections and retrospectives, I should find a way to encourage specificity and accountability.

- It is good to keep all the team members in regular contact with the community, including clients, playtesters, and other stakeholders.

- It is better if all team members share goals and motivations. More specifically, it's better if everyone is working for credit or for pay, not a mixture of both. A mixture leads to conflicts of priority within the team, particularly with the natural rhythm of the semester.

- While I can lead a team in the production of someone else's design, I prefer leading teams of students through the holistic design process, from inspiration to finished product.

Current status

As of this writing, the game is in public beta and playable for free online. There is some work-in-progress art that comes up when you cross the Ohio River, but that should be replaced by the end of the day. A few team members have volunteered to do some QA over the break, and I believe all the defects have been ironed out.


| The game's main title screen |